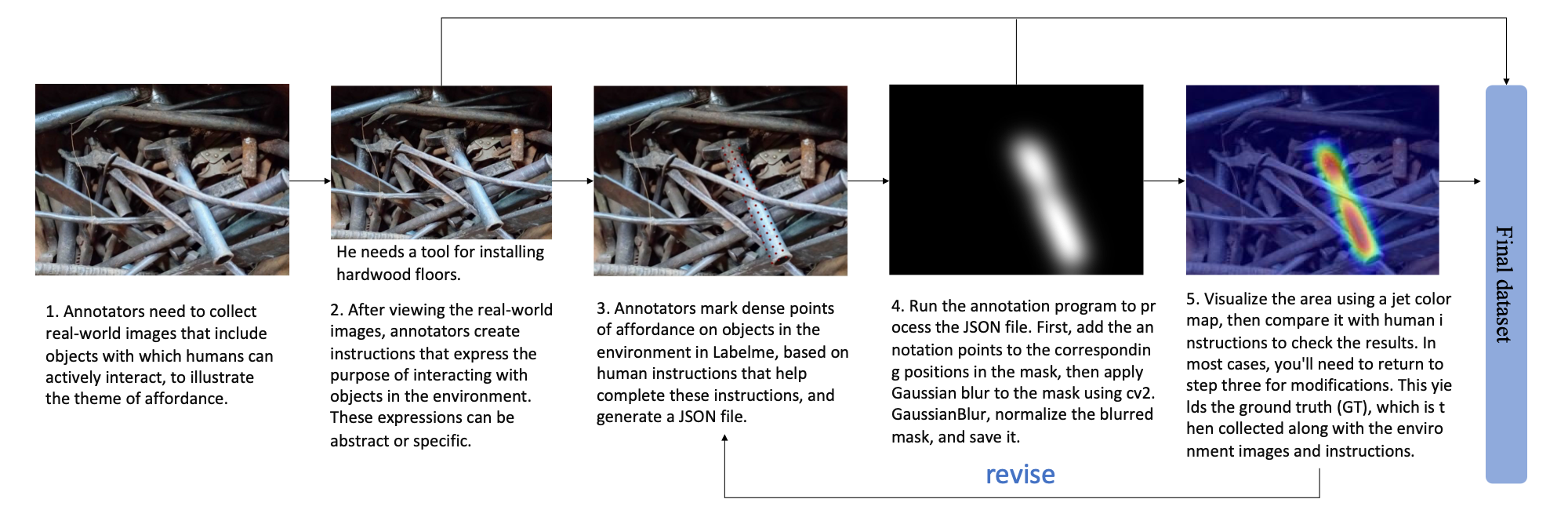

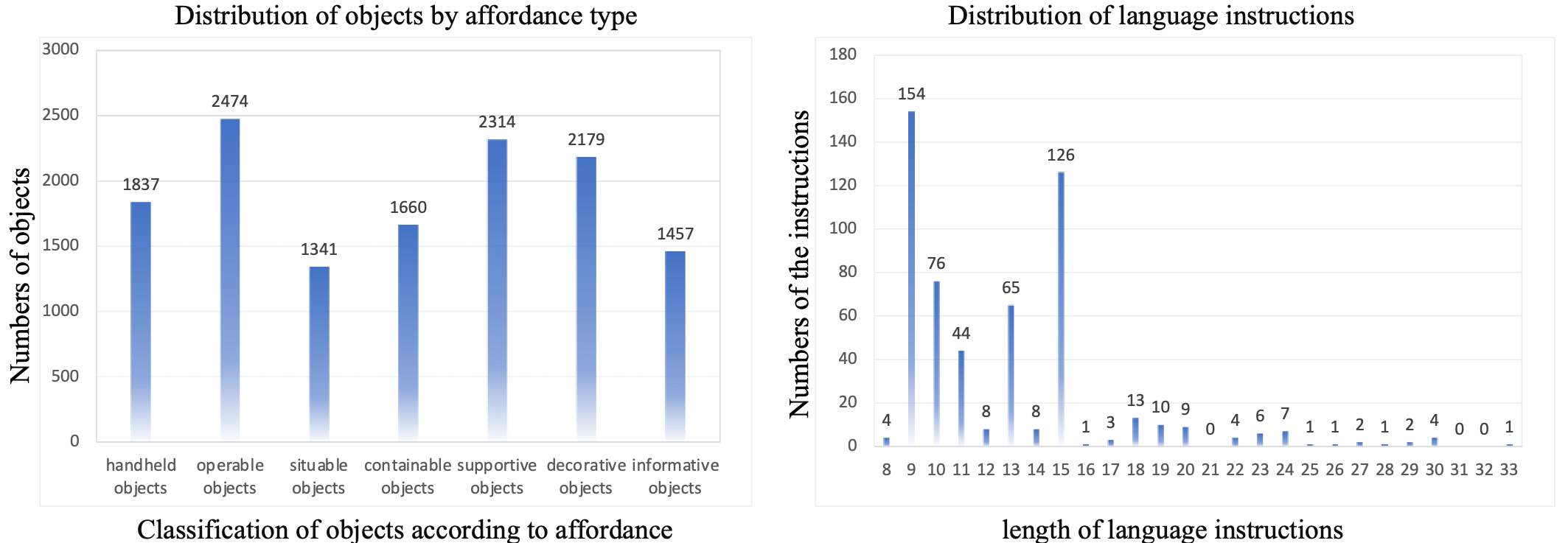

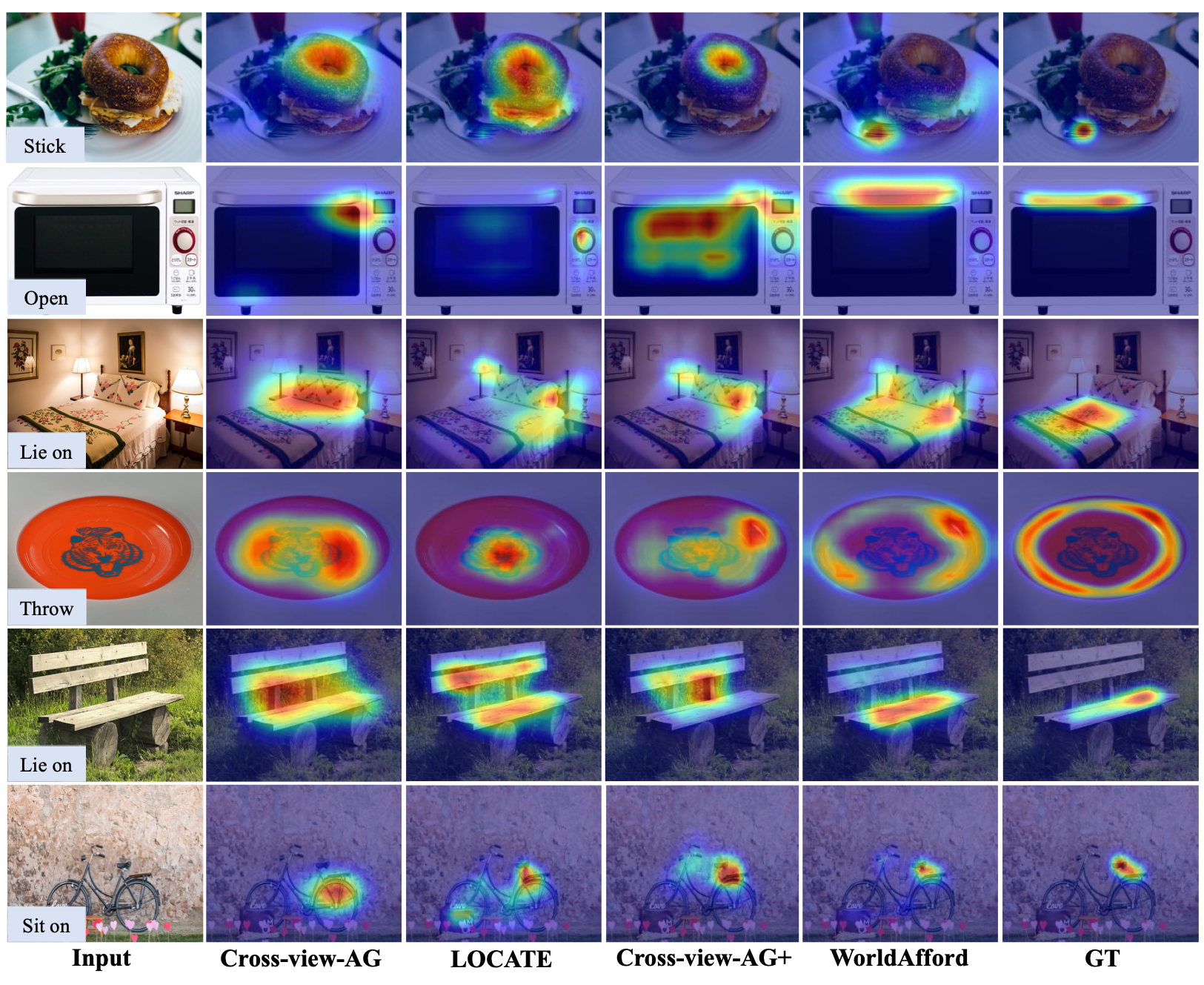

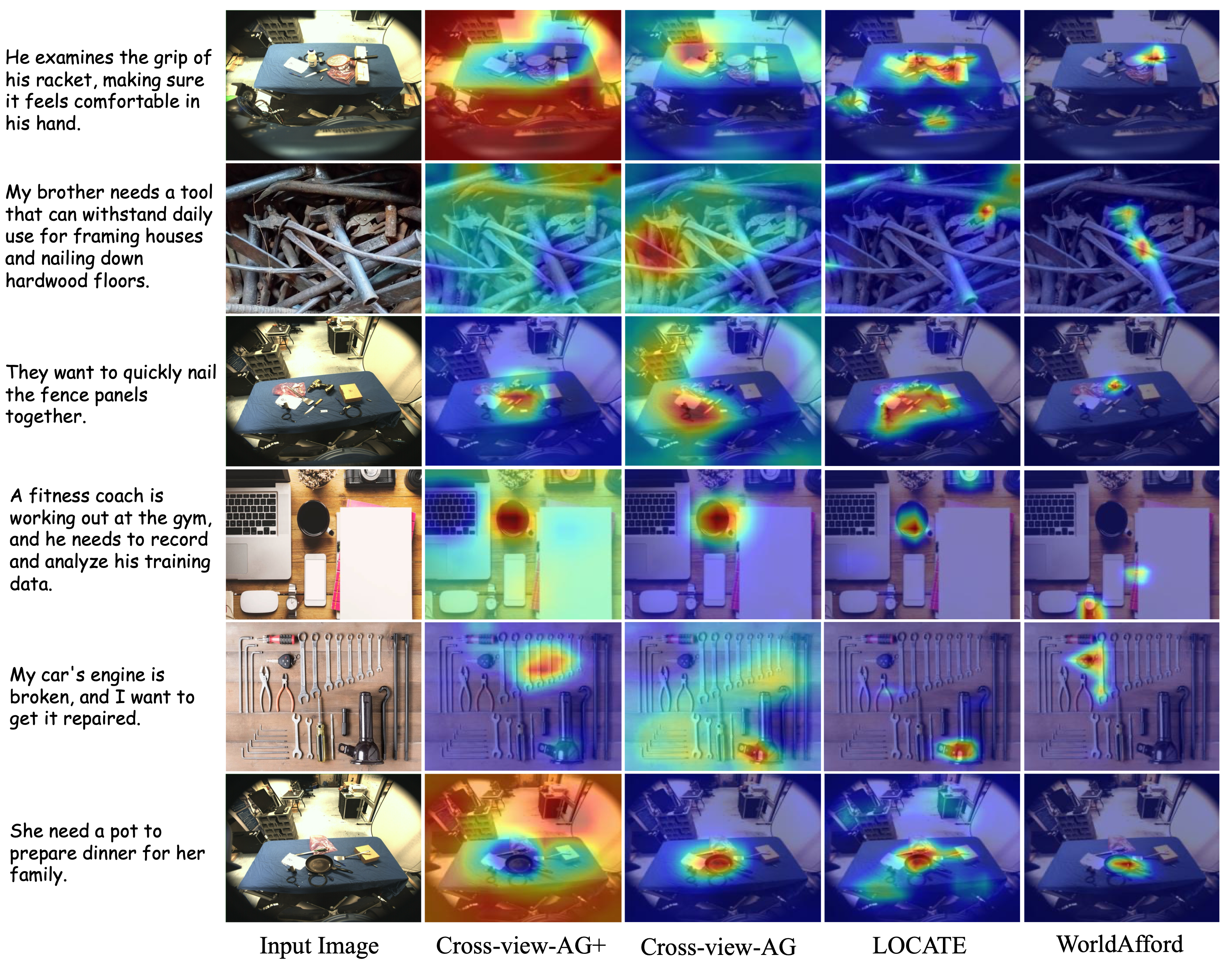

Affordance grounding aims to localize the interaction regions of manipulated objects in a scene image according to given instructions, which is essential for Embodied AI and manipulation tasks. A critical challenge in affordance grounding is that the embodied agent must understand human instructions, determine which tools in the environment can be used, and work out how to use those tools to accomplish the instructions. Most recent works support only simple action labels as input instructions for localizing affordance regions, and therefore fail to capture complex human objectives. Moreover, these approaches typically identify the affordance regions of only a single object in object-centric images, ignoring the object context and struggling to localize the affordance regions of multiple objects in the complex scenes encountered in practical applications. To address these limitations, we introduce, for the first time, a new task of affordance grounding based on natural language instructions, extending the input from simple action labels to complex human instructions. For this new task, we propose a new framework, WorldAfford. We design a novel Affordance Reasoning Chain-of-Thought Prompting approach to reason about affordance knowledge from LLMs more precisely and logically. We then use SAM and CLIP to localize the objects in the image that are related to this affordance knowledge, and identify the affordance regions of these objects through an affordance region localization module. To benchmark this new task and validate our framework, we construct an affordance grounding dataset, LLMaFF. Extensive experiments show that WorldAfford achieves state-of-the-art performance on both the existing AGD20K dataset and the new LLMaFF dataset. In particular, WorldAfford can localize the affordance regions of multiple objects and provide an alternative when the objects in the environment cannot fully match the given instruction.
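The object-selection step (using SAM and CLIP to find the objects related to the LLM's affordance knowledge) can be pictured as ranking candidate masks by image-text similarity. The snippet below is a minimal sketch under stated assumptions, not the WorldAfford implementation: it assumes each SAM mask crop has already been encoded by a CLIP-style image encoder, that each object name produced by the LLM reasoning step has been encoded by the matching text encoder, and the helper name `select_masks` and the default `threshold` are hypothetical choices for illustration.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def select_masks(mask_embs: np.ndarray, text_embs: np.ndarray,
                 threshold: float = 0.25) -> dict[int, int]:
    """Hypothetical helper: pick the best-matching mask for each object name.

    mask_embs: (M, D) embeddings of candidate mask crops (assumed CLIP-style image encoder).
    text_embs: (T, D) embeddings of object names from the LLM (assumed CLIP-style text encoder).
    Returns {text_index: mask_index} for names whose best cosine similarity >= threshold.
    """
    sims = l2_normalize(text_embs) @ l2_normalize(mask_embs).T  # (T, M) cosine similarities
    best = sims.argmax(axis=1)                                   # best mask per object name
    return {t: int(m) for t, m in enumerate(best) if sims[t, m] >= threshold}


# Toy usage with hand-made 3-D "embeddings" (real CLIP embeddings are much larger).
masks = np.array([[1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
texts = np.array([[0.1, 0.9, 0.0],   # closest to mask 1
                  [0.0, 0.2, 1.0]])  # closest to mask 2
print(select_masks(masks, texts))    # {0: 1, 1: 2}
```

The threshold drops object names with no convincing match in the scene; a system that, like WorldAfford, proposes alternatives could instead fall back to the closest available object, but how the paper handles that case is not specified here.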

In this paper, we introduce a new task of affordance grounding based on natural language instructions and propose a novel framework, WorldAfford. Our framework uses LLMs to process natural language instructions and employs SAM and CLIP for object segmentation and selection. We further propose a Weighted Affordance Grounding Context Broadcasting module, which allows WorldAfford to localize the affordance regions of multiple objects. Additionally, we present a new dataset, LLMaFF, to benchmark this task. The experimental results demonstrate that WorldAfford outperforms other state-of-the-art affordance grounding methods on both the AGD20K dataset and the new LLMaFF dataset.
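To illustrate why localizing several objects at once needs per-object handling, the snippet below is a hypothetical sketch and not the paper's Weighted Affordance Grounding Context Broadcasting module. It assumes a dense affordance heatmap over the whole image and one binary mask per selected object, restricts the heatmap to each mask, rescales each object's region to [0, 1] independently so that a weakly activated object is not drowned out by a strongly activated one, and merges the results with an element-wise maximum. The function name `per_object_affordance` is illustrative.

```python
import numpy as np


def per_object_affordance(heatmap: np.ndarray, masks: np.ndarray,
                          eps: float = 1e-8) -> np.ndarray:
    """Hypothetical sketch: combine the affordance regions of several objects into one map.

    heatmap: (H, W) non-negative affordance scores for the whole image (assumed input).
    masks:   (K, H, W) boolean object masks, one per selected object (assumed input).
    Returns an (H, W) map in which each object's region is rescaled to [0, 1] on its own.
    """
    out = np.zeros_like(heatmap, dtype=float)
    for mask in masks:
        region = np.where(mask, heatmap, 0.0)      # keep scores inside this object only
        peak = region.max()
        if peak > eps:                             # skip objects with no activation at all
            out = np.maximum(out, region / peak)   # per-object normalization, then merge
    return out


# Toy usage: object A is strongly activated (0.9), object B only weakly (0.1).
heat = np.array([[0.9, 0.0, 0.0, 0.0],
                 [0.0, 0.0, 0.0, 0.1]])
masks = np.zeros((2, 2, 4), dtype=bool)
masks[0, 0, :2] = True   # object A covers the top-left cells
masks[1, 1, 2:] = True   # object B covers the bottom-right cells
print(per_object_affordance(heat, masks))  # both objects now peak at 1.0
```

A single global normalization would leave object B at about 0.11 of object A's peak; normalizing per object keeps both regions visible, which is one simple way to expose multiple affordance regions in a cluttered scene.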

@article{chen2024worldafford,
  title={WorldAfford: Affordance Grounding based on Natural Language Instructions},
  author={Chen, Changmao and Cong, Yuren and Kan, Zhen},
  journal={arXiv preprint arXiv:2405.12461},
  year={2024}
}